This is a two-part series to help you take your chat to the next level. We’ll achieve this by covering:

- Optimising Chatbot design.

- Improving Natural Language Processing accuracy & installing custom code.

We get many questions about ways to increase chatbot net promoter score, and it’s here, at the chatbot design stage, that the 80/20 rule rears its head: simple, inexpensive and rapid changes can make the biggest gains.

Set user expectations

One of the biggest contributors to a negative net promoter score (NPS) is the very first moment the customer interacts with the chatbot: typically, on the live chat widget when the chatbot first says “hello”.

A quick win is to UX test whether the user is expecting a human or a robot, and set the expectations immediately.

Generally speaking, our scores increased when we told users they were chatting to a robot but immediately gave them the option to contact a human if needed. It’s worth testing which phrase works best: something like “Contact a sales rep”, “Get help” or “Need help”.

Interestingly, when you give users the option of talking to a human, most won’t take it. You get to increase chatbot conversion AND NPS. Winner!

It’s easy to empathise with your user’s frustration: you have a pain you want solved, and the first thing you see is some generic robot offering irrelevant buttons for options you’re not looking for.

We’ve all had enough of waiting on the phone and listening to those 9 options (press 1 for something not relevant to you, press 2 for something still no use to you...).

Experiment with empathising with different emotions

It’s pretty ironic that we’re demanding emotional empathy from our chatbots when most humans have a lot of room for improvement.

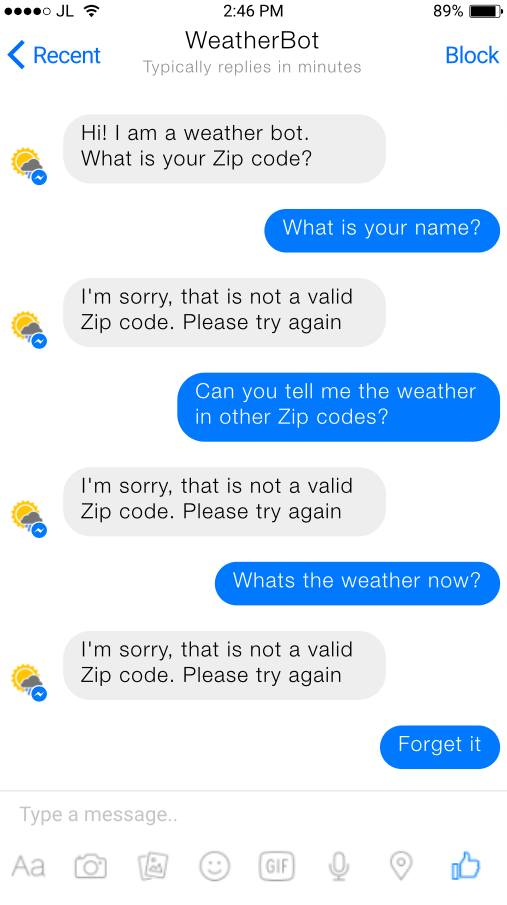

We should be doing all we can to avoid the user saying something like “This is stupid” when your chatbot fails several times in succession. Inevitably, this will happen, and I bet your auto-response is set to one of:

- “Sorry, I can’t help you”

- “I don’t understand, please try again”

- “Please try rephrasing”

These responses aren’t useful and only serve to anger users more.

Instead, you can program your chatbot to detect negative sentiments and react with empathy and patience. Here are some better responses to use in these scenarios:

- “It’s understandable that you’re frustrated. Let’s try a different tactic.”

- “I’m sorry this is a frustrating experience for you. I’ll get a human customer support agent right away.”
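
As a rough illustration of reacting to negative sentiment, here is a minimal sketch. The keyword list, the function names and the two-step "retry, then escalate" policy are all my own assumptions for the example; a production bot would use a proper sentiment model rather than substring matching.

```python
from typing import Optional

# Hypothetical, deliberately tiny keyword list. A production bot would use a
# trained sentiment model rather than substring matching.
NEGATIVE_MARKERS = ("stupid", "useless", "frustrat", "annoying", "waste of time")

RETRY_REPLY = "It's understandable that you're frustrated. Let's try a different tactic."
ESCALATE_REPLY = (
    "I'm sorry this is a frustrating experience for you. "
    "I'll get a human customer support agent right away."
)


def is_negative(utterance: str) -> bool:
    """Return True if the utterance contains any negative marker."""
    text = utterance.lower()
    return any(marker in text for marker in NEGATIVE_MARKERS)


def empathetic_reply(utterance: str, already_retried: bool) -> Optional[str]:
    """Pick an empathetic reply, or None when no negative sentiment is found."""
    if not is_negative(utterance):
        return None
    # First sign of frustration: acknowledge it and retry. After that: escalate.
    return ESCALATE_REPLY if already_retried else RETRY_REPLY


print(empathetic_reply("This is stupid", already_retried=False))
```

Returning `None` for neutral messages lets the normal conversation flow carry on untouched, so the empathetic replies only appear when they are needed.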

As a general rule, however, this is going to be a rare instance. A better method altogether is to have the chatbot hand over to a human agent as soon as it falls below its confidence level.

Which leads me nicely onto:

Increase the confidence level when you start out

When you first start out with tools like Dialogflow, your NLU platform will have a generic “confidence level” set. It’s well worth raising the bar on this early on. Your chatbot will fail a lot more often, and your customer service agents won’t see much difference initially, but you’ll get an influx of useful insight, quickly learning which utterances are failing.
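
To make the idea concrete, here is a small sketch of a confidence gate that hands over to a human below a threshold and keeps the failing utterances for review. The `Router` class, the 0.8 threshold and the `"answer"` / `"handover"` labels are assumptions for illustration; the confidence score itself would come from whatever your NLU platform returns.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Router:
    """Routes each turn to the bot or a human based on NLU confidence."""

    threshold: float = 0.8  # assumed starting value; tune it with real traffic
    failed_utterances: List[str] = field(default_factory=list)

    def route(self, utterance: str, confidence: float) -> str:
        if confidence >= self.threshold:
            return "answer"
        # Below the bar: keep the utterance for later training, then hand over.
        self.failed_utterances.append(utterance)
        return "handover"


router = Router(threshold=0.8)
print(router.route("book a table for two", 0.93))
print(router.route("tabel 4 2nite", 0.55))
print(router.failed_utterances)
```

The list of failed utterances is the useful by-product: it is the raw material for deciding which intents need more training phrases.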

Master the human takeover, avoid sensitive and emotional topics

When you’re starting out, the first thing to focus on is making the human handover smooth and painless for your customer.

Initially, this also includes having the chatbot hand over to a human on the first intent recognition failure. Repeated failures only serve to frustrate the user.

Secondly, try to avoid sensitive, emotional or highly polarising topics.

There is an exception, however, if:

- You put a ton of resources into making a custom sentiment analysis engine (a standard NLU platform doesn’t cut it)

- You carry out deep research and testing into specific use cases to ensure you’re precise with the user pains, utterances and intents.

Mirror your user

People like people like themselves. And, funnily enough, they like chatbots like themselves too.

Now granted, this is much harder to implement when you have a more generic bot or something in professional services such as banking and finance. Even so, it is possible to drill down and divide your chatbot into specific demographics, much like the one we’ve created for Deltic group nightclubs.

This does come with plenty of challenges:

- Overlapping intent user flows.

- Duplication of work adding all of the intents and entities to multiple bots.

- Slang and demographic dialect research needed.

- Demographic user behaviour changes.

- A whole bunch of extra backend work.

If you overcome these challenges, however, you’ll be handsomely rewarded with sky-rocketing NPS scores, retention and conversion rates.

Depending on the customer demographics and use case, use emojis, images & GIFs!

Mirroring your user also applies to how they text and communicate. Using things like GIFs and emojis is a really good way of bringing personality and emotion across via text. An image speaks a thousand words, after all.

Master small talk

Aaaah yes. The bane of every chatbot developer’s life. Of course, we don’t mind the stuff like “Are you real?” or “How does this work?” Some people are even nice to your robot by asking “how are you” and saying “please” and “thank you” (which, yes, your bot must know how to respond to).

But when you get ridiculous questions such as “Do you like [celebrity]?”... that’s when I throw my toys out of the pram.

Sadly, many users see these questions as a rite of passage before they consider the bot worthy of handling their problem, and it’s something we must overcome.

One trick to go beyond the basic small talk templates given in NLU tools is to search for all the funny things you can ask Siri and make appropriate responses. It’s laborious and takes time, but a guaranteed way of increasing your chatbot conversion rates.

Experiment with offering guidance

Most chatbots are designed to be transactional or assist a customer in accomplishing a specific task, such as ordering coffee, changing a flight schedule, or checking a bank balance. Yet most fail to make their capabilities clear at the outset, leaving customers to guess at what’s possible.

The most common opening I see in most business chatbots today is either nothing or a vague one-liner like:

“Hi, I’m XYZ bot”

People are inherently efficient (see: lazy) and want to think as little as possible. Research shows using complex language makes people like you less.

This includes asking open-ended questions like: “What can I do for you today?”

So try experimenting with your onboarding process and offering guidance to speed up the time to resolution.

That being said, people also get paralysed by too many options. The ideal mark is 3-5.
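
One way to enforce that limit is to cap the quick-reply buttons in code. This is a sketch only: the function name, the "Something else" catch-all and the example options are made up for illustration, and it assumes you already have the options ranked by how often users pick them.

```python
from typing import List

MAX_OPTIONS = 5  # upper end of the 3-5 range


def build_quick_replies(ranked_options: List[str], max_options: int = MAX_OPTIONS) -> List[str]:
    """Keep the top-ranked options and reserve the last slot for a catch-all."""
    shown = ranked_options[: max_options - 1]
    shown.append("Something else")
    return shown


options = [
    "Track my order",
    "Change delivery",
    "Return an item",
    "Opening hours",
    "Payment issues",
    "Gift cards",
    "Careers",
]
print(build_quick_replies(options))
```

Keeping a catch-all button means users whose problem is not in the top few still have an obvious next step.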

Bring on board / upskill someone to become a chatbot champion and dedicate their time to it.

At the moment, chatbots are a sub-concern, usually of the contact centre team (understandably, as most chatbots are still in the PoC phase). However, chatbots are becoming absolute beasts and will continue to grow as functionality increases and chatbot practices improve (I cover this in more detail in my 2019 predictions article). Intent counts are floating around the 150 mark and increasing.

This adds immense complexity to a conversational flow, and if you’re serious about improving your net promoter score, someone is going to need to take on the analytics and UX testing full time.

Implement procedures, processes and authorisations for your chatbot dev team:

Another pain point we come across, especially as your chatbot scales and grows beyond a basic bot, is user management. Let’s say you have a team of 3-5 bot builders. Everyone has a preference for how they work. This is also the case when designing intents and entities (even after formal training). As the chatbot grows, these differences will start to affect the overall UX of the chatbot, whether it’s the tone of voice or user flow.

Secondly, we’ve not seen any chatbot program have an approval process for what gets deployed. Perhaps this is because chatbots are still in their infancy. Regardless, we currently recommend that all our clients start looking into formalising this process.

Find out the most common pains and make sure you have a fast and simple route to resolution for the customer

Having a flexible design flow is important: if it’s too rigid, people get frustrated because they can’t find what they’re looking for. That said, the opposite can also be true: if your intents are too convoluted, users get frustrated because it takes too long to find a simple solution.

There is some exciting hope for 2019 as “next best action” chatbots are starting to appear. You can find out more about this in our AlphaStar review.

Build in intent recognition even when the chatbot is expecting an answer:

This is one of the key signs of a chatbot moving beyond basic tooling such as Chatfuel and on to the likes of Watson and Dialogflow. Even with these tools, though, it takes particular effort to execute. How to implement this will be expanded on in part 2!

And a word of warning: while this will dramatically improve your customer journey, it also adds a level of complexity when designing and tracking these conversations. So be sure to allocate more resources and time than you think it will take!
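
Until then, here is the general shape of the idea as a hedged sketch: before treating a message as the answer the bot is waiting for, run it through intent detection and switch flows if a confident match comes back. Everything here is hypothetical, including `toy_detect_intent`, which simply stands in for a call to your NLU platform.

```python
from typing import Callable, Optional, Tuple

Detector = Callable[[str], Tuple[Optional[str], float]]


def toy_detect_intent(utterance: str) -> Tuple[Optional[str], float]:
    """Stand-in for a real NLU call: returns (intent, confidence)."""
    if "cancel" in utterance.lower():
        return ("cancel_order", 0.95)
    return (None, 0.0)


def handle_turn(
    utterance: str,
    expected_slot: str,
    detect_intent: Detector,
    threshold: float = 0.8,  # assumed value
) -> Tuple[str, str]:
    """Decide whether the user answered the question or changed topic."""
    intent, confidence = detect_intent(utterance)
    if intent is not None and confidence >= threshold:
        # The user has changed topic mid-question, so follow them.
        return ("switch_intent", intent)
    # No confident intent: treat the message as the answer we asked for.
    return ("fill_slot", expected_slot)


print(handle_turn("actually, cancel my order", "delivery_date", toy_detect_intent))
print(handle_turn("next Tuesday", "delivery_date", toy_detect_intent))
```

The tracking complexity mentioned above shows up quickly: every `switch_intent` leaves a half-finished flow behind that you need to either resume or discard.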

Remember personal details and use them to pre-empt answers

I understand there is a core caveat here: this isn’t possible for many chatbots as data security and privacy can create a bunch of problems.

However, if you are looking to implement pre-emptive answers, I highly recommend reading Casey Phillips’ article. I’ll give credit where it’s due: the article is short but informative.

If you can, an even better scenario is to pre-empt a new user:

While this is an awesome approach, its implementation is unlikely to be simple. That said, if you can make this happen, your chatbot will really be ahead of the game and your users will thank you for removing any repetitive inputs.

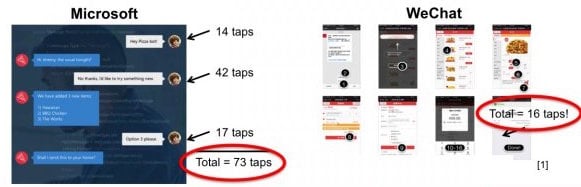

Start looking into hybrid interfaces

I wrote about this in my 2019 predictions and we’re also undertaking our own UX testing at Filament. We’ll share our results in the coming months.

But to summarise: take a look at WeChat – a perfect combination of UX and chatbots.

UX test: “How did we do?”

The consensus is: where possible, keep the conversation flow as short and to the point as possible. This usually means that if we do want to collect feedback, it takes the simple form of asking “How did we do?” and slapping 1-5 buttons below.

This being said, your customers will appreciate an opportunity to tell you how chat agents could do more to help. Chatbots are great for this sort of thing, as they can deliver more personal, varied feedback questionnaires that feel much more fluid after a conversation than the usual email you get sent after a call.

So when you offer a post-chat survey, test including at least one open text question that lets them weigh in. And be prepared to act: their answers are excellent for learning how agents can improve.

Also, don’t be afraid to test various ways of displaying your 1-5 buttons. I made a chatbot once where 1-5 was replaced with the different variants of smiling emojis and people loved it!

Find out when to offer chat and personalise the opening message to that page.

Drift is doing an awesome job of this at the moment. If you browse their website, their chatbot will pop up and ask you a specific question depending on the page you’re on: the message on the chatbot page differs from the one on the pricing page, and so on.

This not only demonstrates you’re pre-empting the customer’s pain, but the customer will be more likely to engage with the chatbot because it’s being more personal and relevant compared to what we always see: “Can we help?”

This also includes testing when to NOT offer chat or when to bypass the chatbot entirely and go straight to a human agent. There is no right answer; it is something only extensive data-driven testing will answer.
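
A page-specific opener can be as simple as a lookup table keyed on the URL path. The sketch below is an assumption-heavy illustration: the paths, the messages and the choice of `/checkout` as a page with no chat are all invented, and this is not a description of how Drift implements it.

```python
from typing import Dict, Optional

# Hypothetical paths and messages. None means "do not offer chat on this page".
OPENERS: Dict[str, Optional[str]] = {
    "/pricing": "Want help picking the right plan?",
    "/products/chatbot": "Curious how the chatbot hands over to a human?",
    "/checkout": None,
}
DEFAULT_OPENER = "Hi, I'm a bot. Ask me a question, or tap 'Get help' to reach a human."


def opening_message(path: str) -> Optional[str]:
    """Return the opener for the longest matching path prefix."""
    matches = [prefix for prefix in OPENERS if path.startswith(prefix)]
    if not matches:
        return DEFAULT_OPENER
    return OPENERS[max(matches, key=len)]


print(opening_message("/pricing/enterprise"))
print(opening_message("/blog/some-article"))
```

Which pages go in the table, and which get `None`, is exactly the kind of decision your test data should drive.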

Speaking of which:

Be data-driven and master your metrics

One of my favourite marketing brands currently is Brainlabs. They frequently mention that part of their astronomical growth (8218%!! YoY) is because any marketing they carry out is gone through and analysed with a fine-tooth comb. They do small A/B tests and make data-driven decisions.

This very much applies to chatbots too.

We’ll cover more detail about A/B testing intents and actions in part 2, but for now, we’ll cover the key metrics you should be tracking and testing:

Average session duration:

Once you’ve confirmed that your chatbot has interacted with enough users, you can start looking at fundamental stats that will give you a basic idea of usage. Average session duration is a good metric to measure, but you have to apply it to your specific industry and circumstances.

For instance, some chatbots are designed solely to answer questions and help clients. In this case, session durations should be on a shorter scale. On the other hand, bots that specialise in placing orders or telling a story should engage users in longer, more meaningful interactions.
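
If your platform lets you export session start and end times, the calculation itself is tiny. This sketch assumes sessions arrive as `(start, end)` datetime pairs, which is my own choice of format rather than any particular tool’s export.

```python
from datetime import datetime
from statistics import mean
from typing import List, Tuple

Session = Tuple[datetime, datetime]  # (start, end); assumed export format


def average_session_seconds(sessions: List[Session]) -> float:
    """Mean session length in seconds, or 0.0 when there is no data."""
    if not sessions:
        return 0.0
    return mean((end - start).total_seconds() for start, end in sessions)


example_sessions = [
    (datetime(2019, 1, 1, 9, 0, 0), datetime(2019, 1, 1, 9, 1, 0)),    # 60 seconds
    (datetime(2019, 1, 1, 10, 0, 0), datetime(2019, 1, 1, 10, 2, 0)),  # 120 seconds
]
print(average_session_seconds(example_sessions))
```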

Sessions per user

Approximately 4 in 10 people interact with a chatbot once, which may be a sign that it didn’t provide the answers for which these users were looking. Keeping an eye on the number of sessions per user is extremely important, as it could be an indication as to whether or not your chatbot is doing its job properly.

Look at the conversations where users interacted with your bot the least to establish which issues it was unable to solve. You should, however, also look at users who have had multiple sessions with your chatbot in order to see what it did right. You can then use this as a blueprint and aim to recreate this type of conversation every time someone interacts with your chatbot.
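
Here is a rough sketch of how you might count sessions per user and find the one-time users worth investigating. It assumes you can export one user ID per session; the function names and IDs are illustrative only.

```python
from collections import Counter
from typing import Dict, List


def sessions_per_user(session_user_ids: List[str]) -> Dict[str, int]:
    """Count how many sessions each user had (one ID per session in the input)."""
    return dict(Counter(session_user_ids))


def single_session_share(session_user_ids: List[str]) -> float:
    """Fraction of users who only ever had one session."""
    counts = Counter(session_user_ids)
    if not counts:
        return 0.0
    one_timers = sum(1 for n in counts.values() if n == 1)
    return one_timers / len(counts)


ids = ["a", "b", "a", "c", "a", "d", "b"]
print(sessions_per_user(ids))
print(single_session_share(ids))
```

The users with a count of one are where to start reading transcripts; the users with the highest counts are your blueprint.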

CTR (or lack of CTR if your objective is to keep them within the bot)

Although CTR isn’t currently a major metric, it will undoubtedly become one of the most important ones to look out for in the future. Remember, if the interaction is meant to be self-contained, meaning the sales/service process should be accomplished solely through your bot, high CTRs can be a major red flag.

Confusion triggers

This is a bit of a no-brainer:

Not only should you think about the way your users react to your bot, but also about how your chatbot behaves when faced with difficult tasks and requests. There are many ways a user can make a request or ask a question; if your bot is confused, it will likely come up with a response such as “I don’t understand.” Measure the number of times your bot shows confusion triggers and analyse each case individually to discover the best way to prevent this from happening in the future.
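
A simple way to measure this is to count how often the fallback intent fires and which utterances cause it. In this sketch the `"fallback"` intent name and the `(utterance, matched_intent)` log format are assumptions; use whatever your platform calls its default fallback.

```python
from collections import Counter
from typing import List, Tuple

FALLBACK_INTENT = "fallback"  # hypothetical name for the "I don't understand" intent


def confusion_report(turns: List[Tuple[str, str]]) -> Tuple[float, List[Tuple[str, int]]]:
    """Return the confusion rate and the utterances that triggered it, most common first."""
    confused = [utterance for utterance, intent in turns if intent == FALLBACK_INTENT]
    rate = len(confused) / len(turns) if turns else 0.0
    # Normalise case and whitespace so near-identical utterances group together.
    grouped = Counter(utterance.lower().strip() for utterance in confused)
    return rate, grouped.most_common()


turns = [
    ("hi", "greeting"),
    ("tabel for 2", "fallback"),
    ("Tabel for 2 ", "fallback"),
    ("opening hours", "hours"),
]
print(confusion_report(turns))
```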

Analyse what customers say

Customers know a lot about how your company could do better. Digging into this data can reveal the top things that keep customers from being your promoters. And sharing this information with your agents is one of the most powerful ways of resolving issues.

Go native & bring in the experts

Ok, it’s a bit of a plug here, but if you’ve tried all of the above and you still can’t seem to boost that net promoter score, then it’s time to bring in the team that obsesses over this. As the saying goes: hiring someone inexperienced to do the job can be the most expensive hire of all.

This step is recommended if you feel you’ve reached the physical limits of training NLU services like Watson Assistant, Microsoft LUIS or Google Dialogflow in areas such as:

- Bot-wide confidence levels (having specific confidence levels for certain areas of utterances and entities is SO much better).

- Implementing the latest NLP & sentiment analysis techniques to increase accuracy.

- Analytics that are pretty limited, when you need more detailed insight to decide which intents to improve next.

Firstly, well done: you’re doing better than most. But to progress, you’re going to need to start building custom code, wrappers and processes. There are far better systems and NLP techniques that can be used, such as:

- Using Bidirectional Encoder Representations from Transformers (BERT) for your NLU.

- Using enterprise chatbot tools such as EBM to implement reliable authorisation processes, gain the ability to integrate with backend systems and protect your IP from NLU service providers.
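
To give a flavour of the kind of wrapper code involved, here is a sketch of the per-intent confidence levels mentioned earlier, replacing a single bot-wide value. The intent names and threshold numbers are invented for illustration, and the confidence score is assumed to come from your NLU service.

```python
from typing import Dict

DEFAULT_THRESHOLD = 0.7  # assumed bot-wide fallback value

# Hypothetical intents: be strict where a mistake is costly, relaxed where it is not.
INTENT_THRESHOLDS: Dict[str, float] = {
    "cancel_subscription": 0.9,
    "small_talk": 0.5,
}


def accept(intent: str, confidence: float) -> bool:
    """Accept the NLU match only if it clears that intent's own threshold."""
    return confidence >= INTENT_THRESHOLDS.get(intent, DEFAULT_THRESHOLD)


print(accept("small_talk", 0.6))
print(accept("cancel_subscription", 0.8))
print(accept("opening_hours", 0.75))
```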